So I got round to reading the original paper about automatically predicting who’s likely to be a troll. This was always likely to be fun:

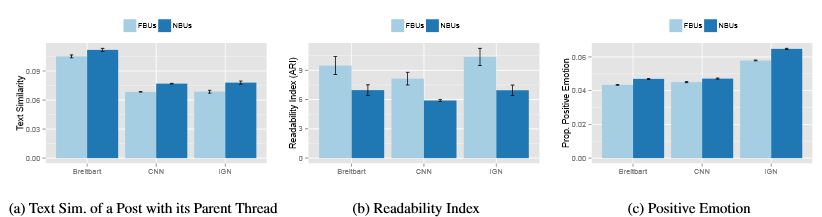

Defining trolls as those who get banned for trolling (a pragmatic solution if nothing else), the authors obtained a large corpus of comments from three high-volume sources: CNN, a gamer news site, and Breitbart. (Clearly they weren’t about to risk not finding enough trolls.) They paid people to rate the comments on various metrics, derived a lot of algorithmic metrics as well, and used both to train a machine learning model to guess which users were likely to be banned down the line.

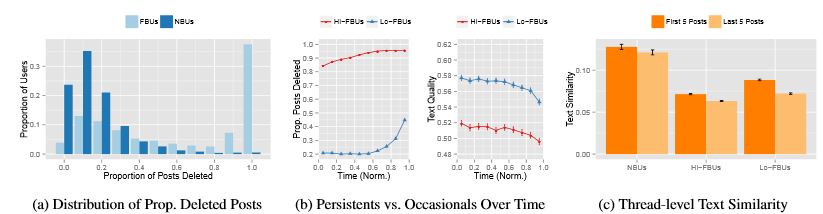

The results are pretty fascinating. For a start, there are two kinds of troll – ones who troll-out fast, explode, and get banned, and ones whose trollness develops gradually. But it always develops, getting worse over time.

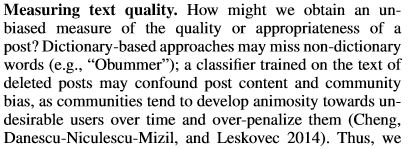

In general, we can conclude that trolls of all kinds post too much, they obsess about relatively few topics, they are often off topic, and their prose is unreadable as measured by an automated index of readability. Readability was one of the strongest predictors they found. They also generate lots of replies and monopolise attention.
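To give a feel for what an automated readability score involves, here is a minimal sketch of one common choice, the Automated Readability Index. I’m assuming that formula purely for illustration; the paper’s exact index and tokenisation may well differ, and the function name and regexes are my own. Higher scores mean harder-to-read text.

```python
import re

# Assumption: ARI is used here only as an example of an automated
# readability index; the paper's exact metric may differ.
WORD_RE = re.compile(r"[A-Za-z0-9]+(?:['’][A-Za-z0-9]+)*")
SENTENCE_SPLIT_RE = re.compile(r"[.!?]+")


def automated_readability_index(text: str) -> float:
    """ARI = 4.71 * (chars / words) + 0.5 * (words / sentences) - 21.43."""
    words = WORD_RE.findall(text)
    sentences = [s for s in SENTENCE_SPLIT_RE.split(text) if s.strip()]
    if not words or not sentences:
        return 0.0  # nothing to score
    # Count only letters and digits, ignoring apostrophes inside words.
    chars = sum(ch.isalnum() for word in words for ch in word)
    return 4.71 * chars / len(words) + 0.5 * len(words) / len(sentences) - 21.43


if __name__ == "__main__":
    print(automated_readability_index("The cat sat."))
    print(automated_readability_index("Institutional considerations predominate."))
```

Short words in short sentences score low; long words and sprawling sentences push the score up.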

Not surprisingly, predictions are harder the further the moment of the ban is into the future. However, the classifier was most effective looking at the last 5 to 10 posts – it actually lost forecasting skill if you gave it more data. Fortunately, because trolling is a progressive condition that tends to get worse, scoring the last 10 comments on a rolling basis is a valid strategy.
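As a rough sketch of what scoring the last 10 comments on a rolling basis could look like: the scoring function below is a stand-in (here, the fraction of recent comments that were deleted), not the paper’s classifier, and `rolling_scores` and its parameters are names I’ve made up.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def rolling_scores(
    comments: Iterable[T],
    score_window: Callable[[List[T]], float],
    window: int = 10,
) -> Iterator[float]:
    """Yield a score after each comment, computed on the last `window` comments only."""
    recent: Deque[T] = deque(maxlen=window)  # older comments fall off automatically
    for comment in comments:
        recent.append(comment)
        yield score_window(list(recent))


def deleted_fraction(flags: List[int]) -> float:
    """Hypothetical scorer: share of the window's comments that were deleted (1 = deleted)."""
    return sum(flags) / len(flags)


if __name__ == "__main__":
    history = [0, 0, 0, 1, 0, 1, 1, 1]  # made-up deletion flags for one user
    for i, score in enumerate(rolling_scores(history, deleted_fraction, window=4), 1):
        print(f"after comment {i}: {score:.2f}")
```

The bounded `deque` is the whole trick: the score only ever sees recent behaviour, which suits a condition that gets worse over time.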

Their algorithm, in the end, identified trolls with about 80% reliability. Very interestingly indeed, its performance didn’t suffer much if it was trained against normal below-the-line noise and then used on gamergate, or if it was trained against gamers and then used on libertarians (perhaps less of a surprise), or whatever. The authors argue that this is an indication that it’s picking up some kind of pondlife tao, an invariant essence of disruptive windbag.

The really interesting bit, though, was when they got to the feedback loop between potential trolls, moderators, and the civilian population. You might think that being able to identify potential trolls within the first 5-10 comments presents the possibility of an early-intervention strategy. My own experience with Fistful of Euros back when it had 150-comment threads about the Middle East was that explicit early warnings – yellow cards – often worked. They found, however, that earlier and more aggressive intervention from moderators and other users was correlated with faster escalation. Specifically, those who had posts deleted early saw their readability index scores worsen rapidly, and worsening readability is one of the strongest markers of trollness.

Now, you might say this doesn’t matter. Just stick the Oto Melara Super Rapid 76 in automatic close-in defence mode and let the machines do the work!

But there’s a serious issue here and it’s our old pal, the Terroriser algorithm. They make the excellent point that 80% is pretty good but still leaves a lot of false positives, because most commenters aren’t trolls to begin with. Given that the predictors we mentioned above are basically conventional norms of discursive civility, there’s also the problem that our filter might be both racist and snobbish. The fact it worked well across dissimilar communities, though, is encouraging.
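To see why 80% still means a lot of false positives, here’s a back-of-the-envelope sketch. Every number in it is hypothetical: I’m assuming “80%” means the classifier catches 80% of real trolls and clears 80% of everyone else, and I’m inventing a community of 10,000 users of whom 5% are trolls. None of that comes from the paper.

```python
from typing import Tuple


def flagged_breakdown(
    users: int, troll_rate: float, sensitivity: float, specificity: float
) -> Tuple[float, float, float]:
    """Return (true positives, false positives, precision) for a flagging classifier."""
    trolls = users * troll_rate
    civilians = users - trolls
    true_pos = trolls * sensitivity             # real trolls correctly flagged
    false_pos = civilians * (1 - specificity)   # ordinary users wrongly flagged
    flagged = true_pos + false_pos
    precision = true_pos / flagged if flagged else 0.0
    return true_pos, false_pos, precision


if __name__ == "__main__":
    # All four inputs are illustrative assumptions, not figures from the paper.
    tp, fp, precision = flagged_breakdown(10_000, 0.05, 0.8, 0.8)
    print(f"trolls flagged: {tp:.0f}, civilians flagged: {fp:.0f}, precision: {precision:.1%}")
```

Under those made-up numbers, about 400 trolls get flagged alongside roughly 1,900 innocent users, so fewer than one flag in five is a real troll. The rarer trolls are, the worse this gets.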

The distinction between fast and slow trolls – Hi-FBUs and Lo-FBUs in the paper – also suggests that there’s something going on here about different strategies of anti-social behaviour. Perhaps trolls with more cultural capital adopt strategies of disruption that allow them to persist longer and do more damage? More research, as they say, is needed. That said, I wouldn’t write off early intervention completely, and neither do the authors – the question may just be one of optimisation.

Obviously what it needs now is an implementation.

Back when I ran a forum, my treatment for trolls was to render their posts invisible to anyone except them and anyone else classed as a troll. It usually took them a while to realise what was going on 🙂

Call me a libertarian troll, but I don’t think it’s ethical to game people’s comments on journalism.

There are definitely too many false positives for this to be reliable, and we don’t want automatic software trying to tell people what they can and can’t post. Moderators do a much better job, and we can’t just censor lots of well-meaning people who may not have the best English skills.

i often get banned for trolling but a big part of the problems is i’m a superfast touch-typist with a top of the range keyboard, so i’m not pecking agonisingly slowly and rethinking my comments, i type as fast as i speak: i find touchscreens change what i write for said reason (can’t bear them, eyesight problems, touch is best for me)

Off topic – the maps on this page might make for some good map-blogging:

http://www.theguardian.com/politics/2015/may/09/election-polls-made-three-key-errors

Don’t know if this makes me a troll, at least by algorithm.
